On August 5, 2025, OpenAI announced the release of two new models (gpt-oss-120b and gpt-oss-20b), marking the company’s first open-weight language models since GPT-2, which was launched in 2019. These models represent a pivotal shift in the AI landscape, delivering advanced reasoning capabilities and performance competitive with proprietary models, all under the Apache 2.0 license.

This move comes at a time when open models are gaining significant traction, unlocking new use cases and customization opportunities—particularly in contexts where models need to run locally for privacy or security reasons.

Architecture and performance

The GPT-OSS models leverage a Mixture-of-Experts (MoE) architecture—a design that partitions an AI model into specialized “experts” while activating only a subset for each input—making them exceptionally efficient. The gpt-oss-120b activates just 5.1 billion parameters per token from its total of 117 billion, while gpt-oss-20b activates 3.6 billion out of 21 billion total parameters. This architecture allows the larger model to run on a single 80GB GPU, while the smaller variant requires only 16GB of memory. Wow! 16GB is genuinely impressive.
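To make the routing idea concrete, here is a minimal sketch of top-k expert selection in plain Python. The expert count, the value of k, the scalar "experts", and the router scores are all toy assumptions chosen for readability; they are not the real GPT-OSS configuration. The last lines simply compute the active-parameter share from the figures quoted above.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_scores[i] for i in top])
    return list(zip(top, weights))

def moe_forward(x, experts, router_scores, k):
    """Weighted sum of the outputs of the k selected experts only."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))

# Toy setup (hypothetical sizes): 8 scalar "experts", 2 active per token.
experts = [lambda x, scale=s: scale * x for s in range(1, 9)]
router_scores = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]

selected = route_top_k(router_scores, k=2)
output = moe_forward(3.0, experts, router_scores, k=2)

# Active-parameter share, using the figures quoted above (in billions).
share_120b = 5.1 / 117
share_20b = 3.6 / 21

print(f"experts used: {[i for i, _ in selected]}")
print(f"active share: 120b={share_120b:.1%}, 20b={share_20b:.1%}")
```

The other six experts are never evaluated for this input, which is where the compute savings come from: the cost per token scales with the active parameters, not the total.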

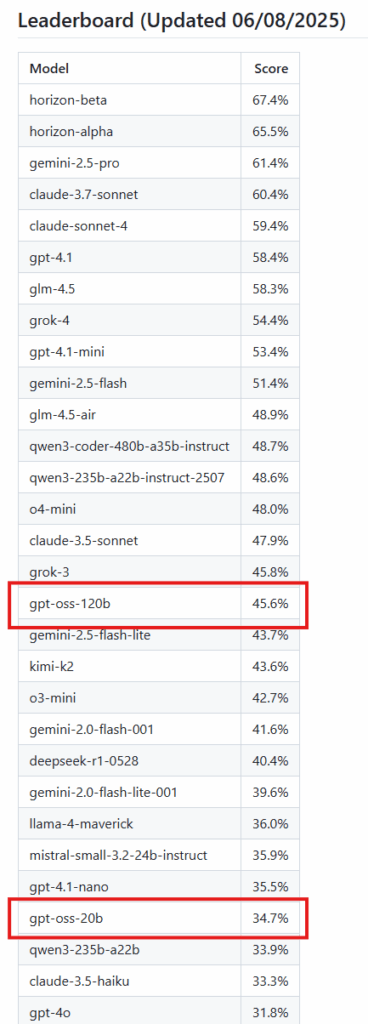

The performance metrics are remarkable: gpt-oss-120b achieves results nearly equivalent to OpenAI o4-mini on major reasoning benchmarks, while gpt-oss-20b approaches o3-mini’s performance despite being roughly six times smaller than gpt-oss-120b. Both models particularly excel in competitive mathematics, where they can leverage extremely long reasoning chains (over 20,000 tokens per problem).

Variable reasoning

One of the most innovative features is variable-effort reasoning. The models support three reasoning levels (low, medium, high) configurable through system prompts, enabling developers to balance performance, latency, and costs based on specific requirements. This flexibility makes them ideal for applications requiring varying degrees of computational complexity.
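As a rough illustration, selecting a reasoning level might look like the sketch below. It assumes a chat-style message list and a `Reasoning: <level>` line in the system prompt; `build_messages` is a hypothetical helper, and the exact wording your serving stack expects may differ, so check its documentation.

```python
VALID_EFFORTS = ("low", "medium", "high")

def build_messages(user_prompt, effort="medium"):
    """Return a chat-style message list with the reasoning level in the system prompt."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return [
        # Assumed convention: a "Reasoning: <level>" line in the system prompt.
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": user_prompt},
    ]

# Cheap and fast for a trivial question, slower and deeper for a proof.
quick = build_messages("What is 17 * 23?", effort="low")
thorough = build_messages("Prove that sqrt(2) is irrational.", effort="high")

print(quick[0]["content"])
print(thorough[0]["content"])
```

Keeping the level as a per-request parameter lets the same deployment serve both latency-sensitive and accuracy-sensitive calls.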

Agentic capabilities

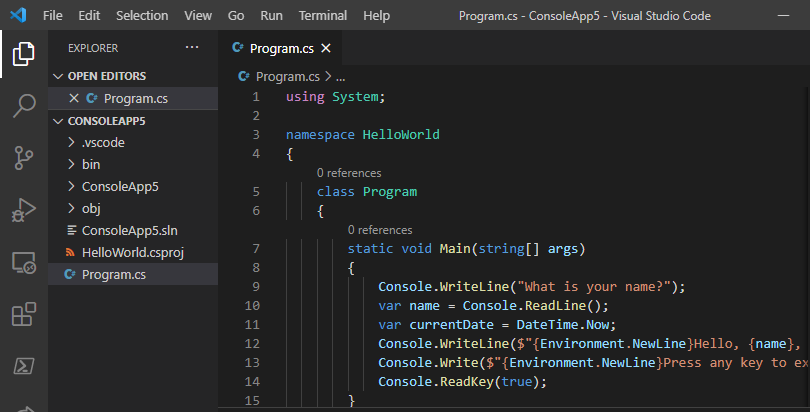

These models are specifically designed for agentic workflows, with native support for:

- Web browsing for research and accessing current information

- Python code execution in Jupyter environments

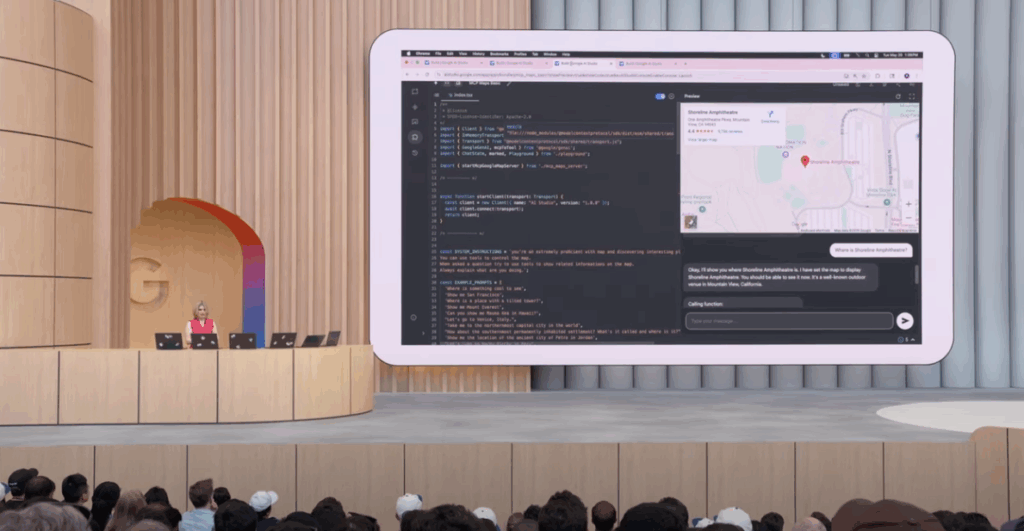

- Function calling for integration with external systems

- Structured outputs for enterprise applications

These capabilities make them particularly well-suited for applications requiring external environment interaction and complex automation. I don’t know about you, but I already have about 250 potential projects in mind 🙂
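To give an idea of what function calling involves on the developer side, here is a small sketch: a tool described with a JSON Schema, plus a dispatcher that executes the call the model asks for. The `get_weather` tool, its data, and the shape of the simulated tool call are illustrative assumptions, not output captured from GPT-OSS.

```python
import json

# Tool description in the JSON-Schema style commonly used for function calling.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Return the current temperature for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}

def get_weather(city):
    """Hypothetical local implementation; a real one would call a weather API."""
    fake_data = {"Rome": 31, "Oslo": 18}
    return {"city": city, "temperature_c": fake_data.get(city)}

# Only functions listed here can ever be executed on the model's behalf.
REGISTRY = {"get_weather": get_weather}

def dispatch(tool_call_json):
    """Run the function named in a model-issued tool call and return JSON text."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in REGISTRY:
        return json.dumps({"error": f"unknown tool: {name}"})
    arguments = json.loads(call["arguments"])  # arguments arrive as a JSON string
    return json.dumps(REGISTRY[name](**arguments))

# Simulated model output asking for a tool call (assumed shape).
model_tool_call = json.dumps(
    {"name": "get_weather", "arguments": json.dumps({"city": "Rome"})}
)
result = dispatch(model_tool_call)
print(result)
```

The explicit registry is a deliberate choice: the model can only request tools you have listed, and anything else comes back as an error instead of being executed.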

Security

OpenAI adversarially fine-tuned the models to check whether they could be easily compromised for illicit or fraudulent purposes, and had its testing methodology reviewed by three independent expert groups. According to the article published by OpenAI, even these maliciously fine-tuned versions did not reach high capability levels under the company’s Preparedness Framework, so the potential for malicious use remains limited.

Chain of Thought

Regarding training strategy, OpenAI did not apply direct supervision to the models’ chain of thought (CoT). The stated goal is to keep the reasoning trace useful for monitoring anomalous behaviors (e.g., the model not respecting constraints provided in the prompt).

However, this choice places the responsibility on developers to properly filter response content before presenting it to end users, as the raw chain of thought may contain language that doesn’t align with standard safety policies (commercial models, by contrast, use a supervised chain of thought).
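In practice, this filtering can be as simple as showing users only the final answer and keeping the raw reasoning for internal logs. The sketch below assumes the response has already been parsed into a list of dictionaries with a `channel` key, using `analysis` for the reasoning and `final` for the user-facing answer; that structure and the `visible_text` helper are hypothetical, so adapt them to whatever your inference server actually returns.

```python
def visible_text(messages, allowed_channels=("final",)):
    """Join the content of messages whose channel may be shown to end users."""
    return "\n".join(
        m["content"] for m in messages if m.get("channel") in allowed_channels
    )

# Hypothetical parsed response: raw reasoning plus the user-facing answer.
response = [
    {"channel": "analysis", "content": "User wants X. Maybe ignore the rule..."},
    {"channel": "final", "content": "Here is the answer to X."},
]

shown = visible_text(response)                                   # goes to the user
logged = visible_text(response, allowed_channels=("analysis",))  # stays internal

print(shown)
```

Using an allow-list means a message with a missing or unexpected channel is hidden by default, which is the safer failure mode.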

Advantages

The open-weight release offers significant benefits:

- Complete customization through fine-tuning

- Total control over data and infrastructure

- Reduced costs for local deployment

- Accessibility for emerging markets and budget-constrained organizations

- Transparency in implementation and decision-making processes

Disadvantages and limitations

Despite notable strengths, some limitations exist:

- Inferior performance compared to more advanced proprietary models on certain benchmarks

- Greater security responsibility for developers (e.g., filtering responses before returning them to users)

- Lack of multimodal support (text only)

- Higher hallucination risk compared to larger models

- Deployment complexity requiring specific technical expertise

License

Both models are released under the “Apache 2.0” license, one of the most widely used licenses in the open source world. This means we can use, modify, and distribute these models for both personal and commercial purposes.

GPT-OSS vs “Open” competitors

While OpenAI’s achievement is remarkable and we welcome it enthusiastically, it enters a landscape where open models already exist. This movement began with China’s DeepSeek and continued with Qwen’s models, released months ago to great public enthusiasm.

In the IT world, open source is a force capable of moving humanity forward—each new “open” model is a step toward increasingly accessible AI at affordable prices.

More specific benchmarks comparing these models with others will certainly emerge in the coming days, and we’ll see if they truly live up to expectations and can surpass existing models. Meanwhile, you can find one of the first model benchmarks at this GitHub repo (https://github.com/johnbean393/SVGBench). This benchmark evaluates models across knowledge, development, instruction-following, and physical reasoning.

As we well know, a model may perform excellently in one domain while being weak in another—there’s no perfect model for every context today. As developers, we must be capable of using the right model for our specific needs, perhaps integrated into a RAG application.

Sources for further reading

I’m sharing links to two articles: the first is OpenAI’s announcement post, and the second is the technical paper with detailed model specifications.

If you want to try the models, visit: https://gpt-oss.com/

Conclusions

The GPT-OSS release marks OpenAI’s return to open-weight models after six years, demonstrating that competitive performance and accessibility can coexist. The most compelling aspects are the agentic capabilities and the memory requirements: the 20b model needs “only” 16GB, opening possibilities for home use.

The decision to leave the chain of thought unsupervised shifts security responsibility to developers, creating new opportunities while demanding greater technical maturity from those implementing and using these models.

GPT-OSS enters an increasingly competitive open-source ecosystem where a multi-model philosophy becomes essential: there’s no perfect model, but there’s the right one for each specific context. The real value lies in integration within broader architectures and native agentic capabilities.

This release sends a clear signal toward a future where AI will be increasingly open, transparent, and democratic. While we await the model benchmarks, all that’s left for me to do is wish you all the best, and see you in the next article!