Back in February, Sam Altman hinted that the new model might drop this summer… and here we are! OpenAI’s new model, GPT-5, is set to launch in August 2025. In this article, we’ll explore what’s coming, keeping in mind that many details will only become clear after release, once we can compare it against competing models.

Multimodality

Version 5 brings true multimodality to the table—we’ll be able to generate text, images, and video all from the same prompt.

Token memory expansion

While GPT-4o had a 128k token window, version 5 pushes this to a massive one million tokens!

Such an enormous token window means the new model can hold a vast amount of information in its context at once. You’ll be able to keep a conversation going for days, even weeks, in the same chat without the model losing track of the information you’ve fed it, as long as the total stays within those million tokens.

In professional contexts, this opens the door to data analysis at an unprecedented scale. Processing thousands upon thousands of data points in a single session is no longer a pipe dream; it’s about to become reality.

What’s more, response performance shouldn’t degrade as the context fills up. Previous OpenAI models showed increasing response times as conversations grew longer. With a token window nearly eight times larger (1,000,000 vs. 128,000 tokens, a ratio of about 7.8), this bottleneck should become a thing of the past.
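To put the jump in perspective, here’s a quick back-of-the-envelope calculation. The window sizes are the figures quoted above; the words-per-token ratio is an assumption based on the common rule of thumb that an English token is roughly three quarters of a word, and real counts vary by tokenizer and language.

```python
# Back-of-the-envelope comparison of context window sizes.
# Window sizes are the figures quoted in the article; WORDS_PER_TOKEN is an
# assumed rule-of-thumb ratio for English text, not an exact tokenizer value.

GPT4O_WINDOW = 128_000     # tokens
GPT5_WINDOW = 1_000_000    # tokens (expected figure, not confirmed until release)
WORDS_PER_TOKEN = 0.75     # assumption: ~3/4 of a word per token in English


def window_ratio(new_tokens: int, old_tokens: int) -> float:
    """How many times larger the new window is than the old one."""
    return new_tokens / old_tokens


def approx_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Rough number of English words that fit in a given token budget."""
    return round(tokens * words_per_token)


if __name__ == "__main__":
    ratio = window_ratio(GPT5_WINDOW, GPT4O_WINDOW)
    print(f"Window ratio: {ratio:.2f}x")                      # Window ratio: 7.81x
    print(f"GPT-4o: ~{approx_words(GPT4O_WINDOW):,} words")   # GPT-4o: ~96,000 words
    print(f"GPT-5:  ~{approx_words(GPT5_WINDOW):,} words")    # GPT-5:  ~750,000 words
```

The word estimates are best read as orders of magnitude rather than exact capacities: code, numbers, and non-English text usually consume more tokens per word.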

Autonomy

How many of you would trust an AI to manage your email account completely? Honestly, I’d have some reservations today 🙂 With GPT-5, we’ll see significantly improved autonomous task execution. I’m really curious to see how far this autonomy can go.

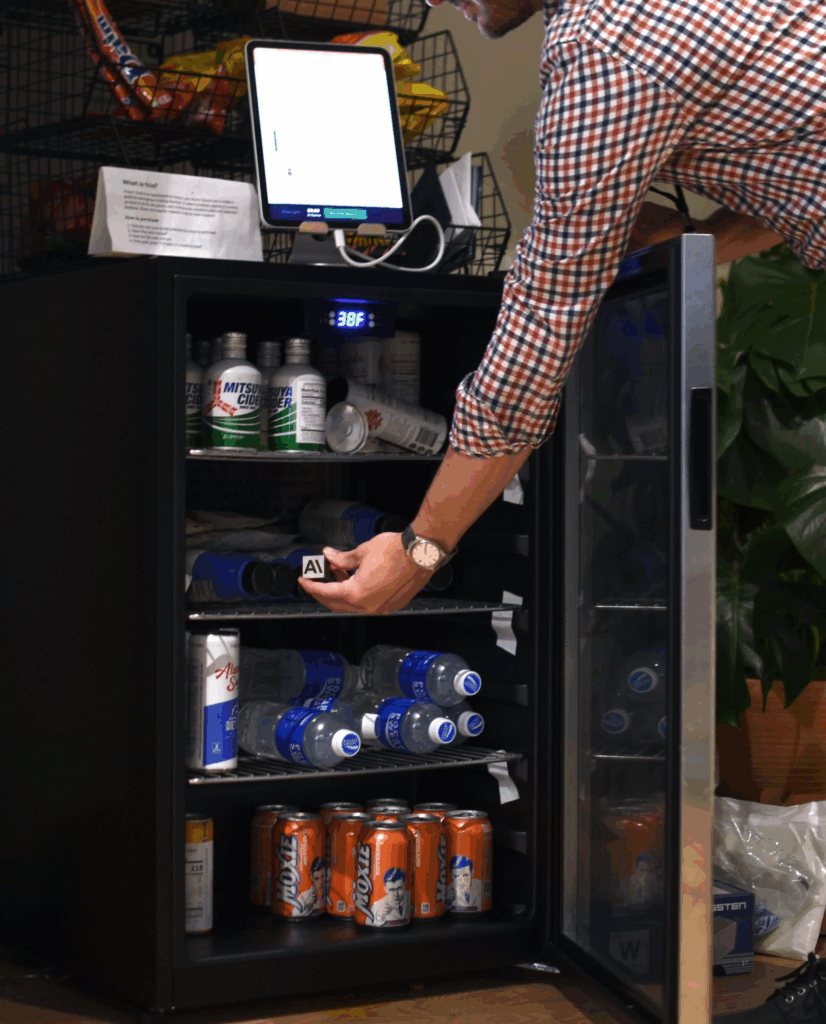

This reminds me of Anthropic’s experiment where they had Claude autonomously manage a vending machine. The result? They ended up with a small tungsten cube 😂. Comedy aside, in one sense the outcome was actually “excellent”: the assistant did run the machine, placing orders, processing payments, and restocking inventory. On the flip side, it was an economic disaster, and the vending machine hemorrhaged money in no time.

You can find the full article here: https://www.anthropic.com/research/project-vend-1

Reasoning

Reasoning and the handling of complex calculations have also been improved. This doesn’t exactly blow me away, since we expect improvements with each release… but apparently it wasn’t so obvious to Sam, who expressed surprise in an interview. He had received an email with a question he couldn’t understand and decided to ask the new model to explain it. The answer impressed him so much that he said he felt redundant next to the model.

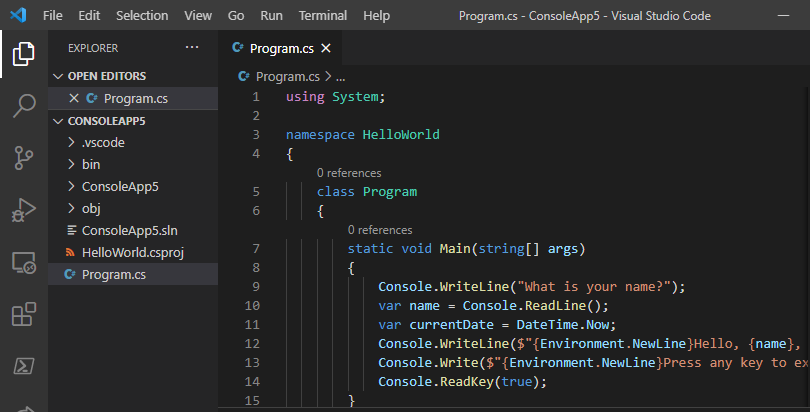

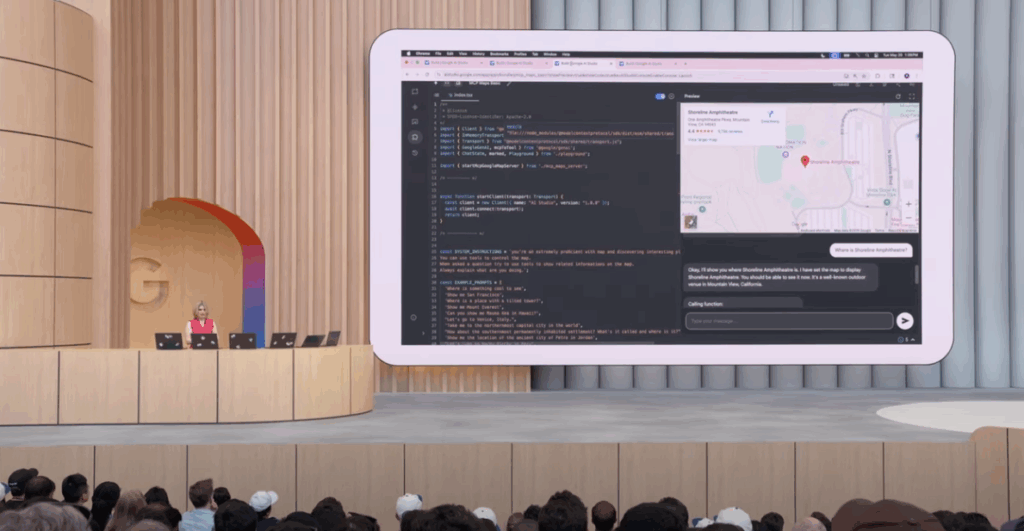

API

API integration will become far more sophisticated, with advanced function calling, built-in agent capabilities, and real-time data access. This should remove a GPT-4 limitation: autonomous task execution in RAG (retrieval-augmented generation) setups required complex coordination.
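For readers who haven’t used function calling yet, here’s a minimal sketch of how it works with OpenAI’s current Chat Completions API; nothing here is GPT-5-specific. You describe a tool with a JSON Schema, the model replies with the name of the function it wants called plus JSON-encoded arguments, and your code runs the function and sends the result back. To keep the example offline and runnable, the model’s reply is simulated, and the `get_order_status` function and its data are hypothetical.

```python
import json

# Tool description in the shape accepted by the Chat Completions `tools` parameter.
# `get_order_status` is a hypothetical function used only for illustration.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_order_status",
            "description": "Look up the shipping status of an order.",
            "parameters": {
                "type": "object",
                "properties": {
                    "order_id": {"type": "string", "description": "The order identifier."}
                },
                "required": ["order_id"],
            },
        },
    }
]

# Hypothetical local data standing in for a real backend.
_ORDERS = {"A123": "shipped", "B456": "processing"}


def get_order_status(order_id: str) -> dict:
    """Hypothetical tool implementation: look up an order in local data."""
    return {"order_id": order_id, "status": _ORDERS.get(order_id, "unknown")}


# Map tool names to the Python callables that implement them.
DISPATCH = {"get_order_status": get_order_status}


def handle_tool_call(tool_call: dict) -> dict:
    """Run one tool call and build the `tool` message to send back to the model."""
    name = tool_call["function"]["name"]
    # The model supplies arguments as a JSON-encoded string, not a dict.
    args = json.loads(tool_call["function"]["arguments"])
    result = DISPATCH[name](**args)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }


# Simulated tool call shaped like an entry in `message.tool_calls`
# (the real SDK returns objects with attributes; a plain dict stands in here).
simulated_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_order_status", "arguments": '{"order_id": "A123"}'},
}

print(handle_tool_call(simulated_call))
# {'role': 'tool', 'tool_call_id': 'call_1', 'content': '{"order_id": "A123", "status": "shipped"}'}
```

In a real application, `TOOLS` would be passed as the `tools` argument of `client.chat.completions.create(...)`, and the returned `tool` message appended to the conversation before asking the model for its final answer.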

Who gets access?

Initially, version 5 will likely be available mainly to Pro subscribers ($200/month), and probably in limited capacity to Plus subscribers as well.

As usual, the model will eventually roll out to everyone else over time.

Conclusions

Now we wait—August is just around the corner, and the new model will soon make its debut. Stay tuned; I’ll definitely be back with a more detailed analysis once the benchmarks are available!